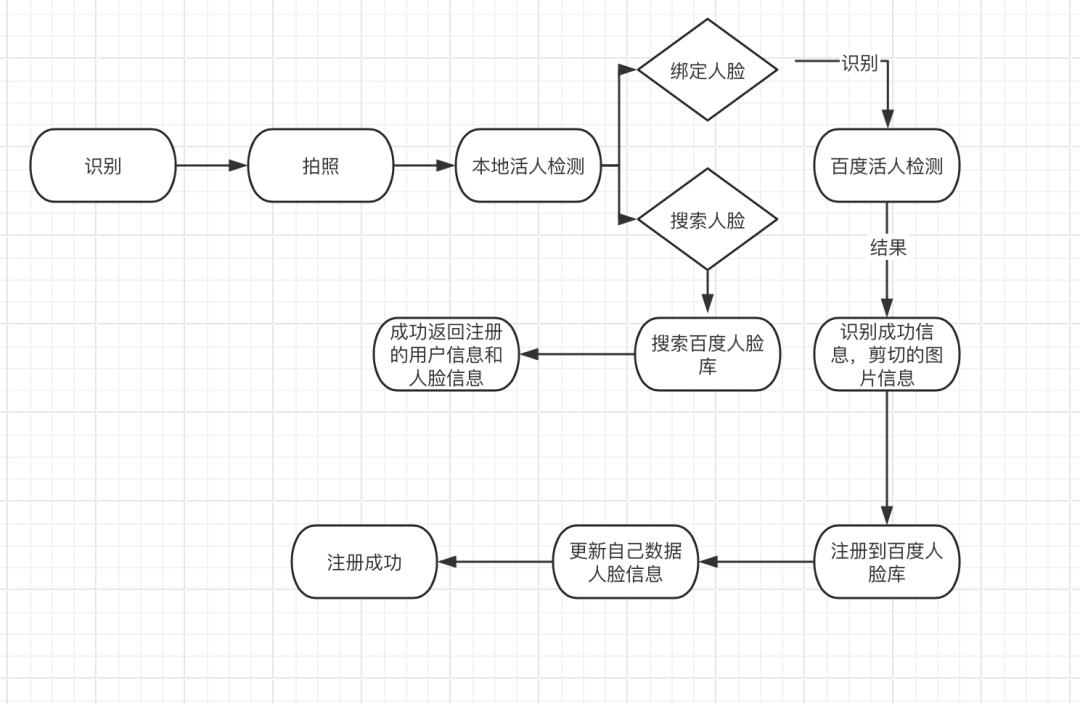

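The requirement: capture a face, register it in the Baidu face library together with the user's profile, and update the face information on our own server. When the user's face is captured again, it is searched in the Baidu face library, and the returned profile can then be used to talk to our own server. In my case the client talks to Baidu directly and then passes the result to our own server (to save traffic), but the backend can also integrate with Baidu directly.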

First, add the Flutter camera library:

camera: ^0.10.5+9 # camera

The Baidu endpoints used are:

/// Baidu recognition: start region

static String get accessTokenUrl => "https://aip.baidubce.com/oauth/2.0/token"; /// get an access token

static String get faceverifyUrl => "https://aip.baidubce.com/rest/2.0/face/v4/faceverify"; /// online image liveness detection V4

static String get addFace => "https://aip.baidubce.com/rest/2.0/face/v3/faceset/user/add"; /// add a face to the face library

static String get updateFace => "https://aip.baidubce.com/rest/2.0/face/v3/faceset/user/update"; /// update a face in the face library

static String get searchFace => "https://aip.baidubce.com/rest/2.0/face/v3/search"; /// face search

static String get delFace => "https://aip.baidubce.com/rest/2.0/face/v3/faceset/face/delete"; /// delete a face

static String get delUser => "https://aip.baidubce.com/rest/2.0/face/v3/faceset/user/delete"; /// delete a user

/// Baidu recognition: end region

1. Face registration

Here is the source code of my face recognition screen first:

import 'dart:async';

import 'dart:convert';

import 'dart:io';

import 'package:bot_toast/bot_toast.dart';

import 'package:camera/camera.dart';

import 'package:flutter/material.dart';

import 'package:get/get.dart';

import 'package:health_platform/bean/BaiduFaceLiveCheckResponse.dart';

import 'package:health_platform/config/radius.dart';

import 'package:health_platform/utils/LogUtils.dart';

import '../../config/fontsize.dart';

import '../../net/MvvmModel/BaiduFace.dart';

import '../../utils/BaiduFaceUtils.dart';

import '../../utils/img/ImageConvertUtils.dart';

import '../../widget/TextWidget.dart';

import '../BaseView.dart';

/// Face recognition screen

class FaceRecognition extends StatefulWidget {

final Function(BaiduFaceLiveCheckResponse)? faceSuccessCallback;

FaceRecognition({super.key, this.faceSuccessCallback});

@override

_FaceRecognitionState createState() => _FaceRecognitionState();

}

class _FaceRecognitionState extends BaseViewState<FaceRecognition>

with WidgetsBindingObserver, TickerProviderStateMixin {

List<CameraDescription>? _cameras;

late StreamSubscription<CameraImage> _streamSubscription;

CameraController? controller;

bool isRecognition = false; // whether recognition is in progress

bool enableAudio() => false; // whether to enable audio

double _minAvailableZoom = 1.0;

double _maxAvailableZoom = 1.0;

double _currentScale = 1.0;

double _baseScale = 1.0;

String takePicPath = "";

CameraLensDirection _camera = CameraLensDirection.back;

// Counting pointers (number of user fingers on screen)

int _pointers = 0;

double imageDisplayWidth = 500;

double imageDisplayHeight = 720 / 1280 * 500;

@override

void initState() {

// TODO: implement initState

super.initState();

WidgetsBinding.instance.addObserver(this);

availableCameras().then((value) {

_cameras = value;

if (_cameras!.isNotEmpty) {

_camera = _cameras![0].lensDirection;

setState(() {});

onNewCameraSelected(_cameras![0]);

} else {

BotToast.showText(text: "No camera found");

}

});

}

// #docregion AppLifecycle

@override

void didChangeAppLifecycleState(AppLifecycleState state) {

final CameraController? cameraController = controller;

// App state changed before we got the chance to initialize.

if (cameraController == null || !cameraController.value.isInitialized) {

return;

}

if (state == AppLifecycleState.inactive) {

// inactive

cameraController.dispose();

} else if (state == AppLifecycleState.resumed) {

// resumed

_initializeCameraController(cameraController.description);

}

}

/// Initialize the camera

Future<void> _initializeCameraController(CameraDescription cameraDescription) async {

final CameraController cameraController = CameraController(

cameraDescription,

ResolutionPreset.high,

enableAudio: enableAudio(),

imageFormatGroup: ImageFormatGroup.jpeg,

);

controller = cameraController;

// If the controller is updated then update the UI.

cameraController.addListener(() {

if (mounted) {

setState(() {});

}

if (cameraController.value.hasError) {

BotToast.showText(

text: "Failed to initialize camera: ${cameraController.value.errorDescription}");

}

});

try {

await cameraController.initialize();

await Future.wait(<Future<Object?>>[

// The exposure mode is currently not supported on the web.

...<Future<Object?>>[

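cameraController.getMinExposureOffset().then((double value) {

// minimum exposure offset (not used on this screen)

}),

cameraController.getMaxExposureOffset().then((double value) {

// maximum exposure offset (not used on this screen)

}),

],

cameraController.getMaxZoomLevel().then((double value) {

// maximum zoom, stored so pinch-to-zoom can be clamped

_maxAvailableZoom = value;

}),

cameraController.getMinZoomLevel().then((double value) {

// minimum zoom, stored so pinch-to-zoom can be clamped

_minAvailableZoom = value;

}),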

]);

/// Get the live image stream. TODO: frames could not be obtained

// await cameraController.startImageStream(

// (CameraImage image){

// // print("image size:[${image.width},${image.height}]");

// //String? base64String = ImageConvertUtils.convertImageToBase64ByFace(image);

// LogUtils.e("base64String:====");

// },

// );

} on CameraException catch (e) {

switch (e.code) {

case 'CameraAccessDenied':

BotToast.showText(text: "Camera permission denied");

break;

default:

BotToast.showText(text: "Failed to initialize camera: ${e.code}");

break;

}

}

if (mounted) {

setState(() {});

}

}

// Called when a camera is selected: switch the existing controller or create a new one

Future<void> onNewCameraSelected(CameraDescription cameraDescription) async {

if (controller != null) {

return controller!.setDescription(cameraDescription);

} else {

return _initializeCameraController(cameraDescription);

}

}

// #enddocregion AppLifecycle

// Get the icon for a camera lens direction

IconData getCameraLensIcon(CameraLensDirection direction) {

switch (direction) {

case CameraLensDirection.back:

return Icons.camera_rear;

case CameraLensDirection.front:

return Icons.camera_front;

case CameraLensDirection.external:

return Icons.camera;

}

// This enum is from a different package, so a new value could be added at

// any time. The example should keep working if that happens.

// ignore: dead_code

return Icons.camera;

}

void _handleScaleStart(ScaleStartDetails details) {

_baseScale = _currentScale;

}

Future<void> _handleScaleUpdate(ScaleUpdateDetails details) async {

// When there are not exactly two fingers on screen don't scale

if (controller == null || _pointers != 2) {

return;

}

_currentScale = (_baseScale * details.scale)

.clamp(_minAvailableZoom, _maxAvailableZoom);

await controller!.setZoomLevel(_currentScale);

}

void onViewFinderTap(TapDownDetails details, BoxConstraints constraints) {

if (controller == null) {

return;

}

final CameraController cameraController = controller!;

final Offset offset = Offset(

details.localPosition.dx / constraints.maxWidth,

details.localPosition.dy / constraints.maxHeight,

);

cameraController.setExposurePoint(offset);

cameraController.setFocusPoint(offset);

}

@override

void dispose() {

controller?.dispose();

WidgetsBinding.instance.removeObserver(this);

super.dispose();

}

@override

Widget build(BuildContext context) {

late Widget cameraPreviewWidget;

if (controller == null || !controller!.value.isInitialized) {

cameraPreviewWidget = const Center(

child: CircularProgressIndicator(),

);

} else {

cameraPreviewWidget = Listener(

onPointerDown: (_) => _pointers++,

onPointerUp: (_) => _pointers--,

child: Center(

child: CameraPreview(

controller!,

child: LayoutBuilder(

builder: (BuildContext context, BoxConstraints constraints) {

return GestureDetector(

behavior: HitTestBehavior.opaque,

onScaleStart: _handleScaleStart,

onScaleUpdate: _handleScaleUpdate,

onTapDown: (TapDownDetails details) =>

onViewFinderTap(details, constraints),

);

}),

)),

);

}

return Scaffold(

resizeToAvoidBottomInset: false,

backgroundColor: Colors.black,

appBar: buildAppBar("Face Recognition"),

body: SizedBox(

height: double.infinity,

width: double.infinity,

child: Stack(

children: [

cameraPreviewWidget, // camera preview

Container(

padding: const EdgeInsets.only(

top: 40, bottom: 0, left: 20, right: 20),

child: Row(

mainAxisAlignment: MainAxisAlignment.center,

children: [

Image.asset(

"images/face_rec.png",

fit: BoxFit.fill,

),

],

),

),

Positioned(

bottom: 20,

left: 20,

right: 20,

child: cText(context, 'Place the ID card (portrait side) inside the dashed frame, make sure the image is clearly visible, then press the recognize button',

color: Colors.white,

fontSize: content_font_size,

fontWeight: FontWeight.w100,

textAlign: TextAlign.center),

),

Positioned(

bottom: 0,

right: 20,

top: 0,

child: Column(

mainAxisAlignment: MainAxisAlignment.center,

children: [

Container(

width: 80,

height: 80,

decoration: BoxDecoration(

color: Colors.white,

borderRadius: BorderRadius.circular(40),

),

child: isRecognition

? Center(

child: InkWell(

child: CircularProgressIndicator(),

onTap: () {

BotToast.showText(text: "Recognizing");

},

),

)

: IconButton(

icon: Image.asset(

"images/icon_face.png",

color: Colors.blue,

width: 40,

),

onPressed: takePicture,

))

],

),

),

if (_cameras != null &&

_cameras!.isNotEmpty &&

_cameras!.length > 1)

Positioned(

right: 20,

bottom: 20,

child: Container(

width: 80,

height: 80,

decoration: BoxDecoration(

color: Colors.black.withOpacity(0.5),

borderRadius: BorderRadius.circular(rect_radius),

),

child: IconButton(

icon: Icon(

getCameraLensIcon(_camera),

color: Colors.white,

size: 50,

),

onPressed: () {

if (_cameras!.isNotEmpty) {

onNewCameraSelected(

_camera == CameraLensDirection.front

? _cameras![0]

: _cameras![1]);

_camera = _camera == CameraLensDirection.front

? CameraLensDirection.back

: CameraLensDirection.front;

} else {

BotToast.showText(text: "No camera found");

}

},

),

)),

isRecognition

? Positioned(

child: Center(

child: Container(

width: imageDisplayWidth + 50,

height: imageDisplayHeight + 100,

decoration: BoxDecoration(

color: Colors.white,

borderRadius: BorderRadius.circular(rect_radius),

),

child: Column(

mainAxisAlignment: MainAxisAlignment.center,

children: [

Image.file(

File(takePicPath),

fit: BoxFit.fill,

width: imageDisplayWidth,

height: imageDisplayHeight,

),

const SizedBox(

height: blank_height_20,

),

Row(

crossAxisAlignment: CrossAxisAlignment.center,

mainAxisAlignment: MainAxisAlignment.center,

children: [

const SizedBox(

width: 20,

height: 20,

child: CircularProgressIndicator(),

),

const SizedBox(

width: 10,

),

cText(context, 'Recognizing face, please wait...',

color: Colors.black,

fontSize: content_font_size,

fontWeight: FontWeight.w100,

textAlign: TextAlign.center)

],

)

],

),

),

),

)

: const SizedBox()

],

),

),

);

}

// Take a picture

void takePicture() async {

if (controller == null || !controller!.value.isInitialized) {

return;

}

if (controller!.value.isTakingPicture) {

// A capture is already pending, do nothing.

return;

}

/// Recognition already in progress

if (isRecognition) return;

try {

await controller!.takePicture().then((value) async {

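/// Check whether the photo contains a face

bool? hasFace = await BaiduFaceUtils.instance.checkFaceInfo(value.path);

LogUtils.e('hasFace:$hasFace ');

if (!(hasFace ?? false)) {

BotToast.showText(text: "No face detected, please align your face with the frame");

return;

}

// The widget may have been disposed while the native check was running

if (!mounted) return;

setState(() {

takePicPath = value.path;

isRecognition = true;

});

LogUtils.e("Photo taken: ${value.path}");

//BotToast.showText(text: "Photo taken: ${value.path}");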

uploadRecognition(value.path);

});

} on CameraException catch (e) {

BotToast.showText(text: "Failed to take picture: ${e.code}");

setState(() {

isRecognition = false;

});

}

}

/// Upload to Baidu for recognition

void uploadRecognition(String path) async {

// BaiduFace.getAccessToken({}, (p0) {

// LogUtils.e("p0:$p0");

// }, error: (msg) {

// LogUtils.e("msg:$msg");

// });

Map<String, dynamic> params = {};

/// Convert the file to base64

String base64String = await ImageConvertUtils.fileImageToBase64(path);

if (base64String.isEmpty) {

BotToast.showText(text: "Recognition error, please try again");

setState(() {

isRecognition = false;

});

return;

}

List<String> images = [base64String];

params['image_list'] = images;

BaiduFace.faceverify(params, (p0) {

Map<String, dynamic> result = p0.result as Map<String, dynamic>;

LogUtils.e("result:${result['face_liveness']}");

if ((result['face_liveness'] ?? 0) < 0.8) {

recognitionFaceFail();

return;

}

if (result['face_list'] == null ||

(result['face_list'] as List<dynamic>).isEmpty) {

recognitionFaceFail();

return;

}

List<dynamic> face_list = result['face_list'] as List<dynamic>;

Map<String, dynamic> face = face_list[0];

// Face probability

if ((face['face_probability'] ?? 0) < 0.8) {

recognitionFaceFail();

return;

}

//BotToast.showText(text: "Recognition succeeded");

Get.back();

p0.sig_image = base64String;

p0.originalImage = path;

if (widget.faceSuccessCallback != null) widget.faceSuccessCallback!(p0);

}, error: (msg) {

LogUtils.e("msg:$msg");

setState(() {

isRecognition = false;

});

});

}

/// Recognition failed

void recognitionFaceFail() {

BotToast.showText(text: "Recognition failed, please try again");

setState(() {

isRecognition = false;

});

}

}

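takePicture is the shutter button handler. It first uses a class built into Android (FaceDetector) to check whether the photo contains a face, so that photos without a face are not uploaded and do not incur Baidu API fees.

This requires bridging Flutter to native code (bool? hasFace = await BaiduFaceUtils.instance.checkFaceInfo(value.path);). The native side looks like this: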

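/// Check whether the image contains a face

public void checkFaceInfo(MethodCall call, Result _result) {

String filePath = CommonUtil.getParam(call, _result, "path");

// Decode the file into a Bitmap; decodeFile returns null when the file cannot be decoded

Bitmap bitmap = BitmapFactory.decodeFile(filePath);

if (bitmap == null) {

_result.success(false);

return;

}

// FaceDetector only accepts bitmaps in RGB_565 format. Android memory is limited, so a very large image may fail to load.

Bitmap bitmapDetect = bitmap.copy(Bitmap.Config.RGB_565, true);

//Log.i(TAG, "getCutBitmap:---width-- "+bitmapDetect.getWidth());

//Log.i(TAG, "getCutBitmap:---height-- "+bitmapDetect.getHeight());

// Create the FaceDetector. The last argument is the maximum number of faces to detect. The larger it is, the higher the error rate, so confidence is used to judge the result, although confidence can be off at times.

FaceDetector faceDetector = new FaceDetector(bitmapDetect.getWidth(), bitmapDetect.getHeight(), 1);

// findFaces returns the number of faces found and fills the array with their position data. It is slow, and slower for larger images.

FaceDetector.Face[] face = new FaceDetector.Face[1];

int faces = faceDetector.findFaces(bitmapDetect, face);

// Log.i(TAG, "getCutBitmap:-00000-------- "+face);

boolean isExistsFace = false;

if (faces > 0) {// a face was detected

// Take the first face returned

FaceDetector.Face face1 = face[0];

// Confidence that this region is a face, 0~1

float confidence = face1.confidence();

Log.i(TAG, "------face confidence---- "+confidence);

// Distance between the eyes

float eyesDistance = face1.eyesDistance();

Log.i(TAG, "------face eye distance---- "+eyesDistance);

// Pass EULER_X, EULER_Y or EULER_Z to get the pose angle around that axis

float angle = face1.pose(FaceDetector.Face.EULER_X);

// Note: eyesDistance is a distance in the image, so the second condition passes for almost any detected face; confidence is the stricter check

if (confidence >= 0.5 || eyesDistance>=0.3){

isExistsFace = true;

}

}

bitmapDetect.recycle();

bitmap.recycle();

_result.success( isExistsFace );

}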
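The Dart side of this bridge (BaiduFaceUtils.instance.checkFaceInfo) is not shown in the post. A minimal sketch of what such a wrapper might look like follows. The class name FaceCheckBridge and the NativeInvoker typedef are hypothetical, and the platform call is injected as a function so the wrapper can be exercised without a device. Only the method name "checkFaceInfo" and the "path" argument come from the Java code above.

```dart
/// Signature of the platform call (hypothetical). In a Flutter app this would
/// typically be backed by MethodChannel.invokeMethod.
typedef NativeInvoker = Future<Object?> Function(
    String method, Map<String, Object?> arguments);

/// Hypothetical Dart-side wrapper around the native checkFaceInfo handler.
class FaceCheckBridge {
  FaceCheckBridge(this._invoke);

  final NativeInvoker _invoke;

  /// Returns true only when the native side replies with `true`.
  /// A platform exception or any other reply is treated as "no face".
  Future<bool> checkFaceInfo(String path) async {
    try {
      final reply = await _invoke('checkFaceInfo', {'path': path});
      return reply == true;
    } on Exception {
      return false;
    }
  }
}
```

In the app this could be wired up as `FaceCheckBridge((m, a) => channel.invokeMethod<bool>(m, a))`, where `channel` is a MethodChannel whose name matches whatever the Android side registers. Treating failures as "no face" means a broken bridge blocks the upload instead of silently spending API calls; whether that trade-off is right depends on your app.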

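uploadRecognition uploads the photo to Baidu for liveness detection. On success Baidu returns the liveness parameters, which are checked against thresholds to decide whether the capture passes.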
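The threshold checks in uploadRecognition could also be pulled out into a pure function, which makes them easier to tune and test. The sketch below mirrors the checks in the screen code (face_liveness, face_list, face_probability, all at 0.8). The enum and function names are my own and not part of any Baidu SDK.

```dart
/// Outcome of checking a faceverify result against local thresholds.
enum LivenessVerdict { pass, lowLiveness, noFace, lowFaceProbability }

/// Applies the same checks as uploadRecognition, in the same order.
/// [result] is the `result` object of the faceverify response.
LivenessVerdict evaluateLiveness(
  Map<String, dynamic> result, {
  double minLiveness = 0.8,
  double minFaceProbability = 0.8,
}) {
  // A missing score counts as 0, as in the screen code.
  final liveness = (result['face_liveness'] as num?)?.toDouble() ?? 0.0;
  if (liveness < minLiveness) return LivenessVerdict.lowLiveness;

  final faces = result['face_list'];
  if (faces is! List || faces.isEmpty) return LivenessVerdict.noFace;

  // Only the first face is inspected.
  final first = faces.first;
  final probability = first is Map
      ? (first['face_probability'] as num?)?.toDouble() ?? 0.0
      : 0.0;
  if (probability < minFaceProbability) {
    return LivenessVerdict.lowFaceProbability;
  }
  return LivenessVerdict.pass;
}
```

Returning a verdict instead of a bool lets the UI show a more specific hint than a generic "recognition failed" message if you want one.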

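After liveness detection succeeds, call Baidu's addFace endpoint to register the face in the face library. I did not use the image returned by Baidu because it is too blurry; I used the original local photo that passed recognition instead.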
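The registration call itself is not shown in the post, so here is a rough sketch of how the request might be assembled with only the Dart standard library. The function names are hypothetical. The field names (image, image_type, group_id, user_id, user_info) and the access_token query parameter follow Baidu's v3 face API as I understand it, so check them against the current documentation before relying on them.

```dart
import 'dart:convert';
import 'dart:io';

/// Builds the token request URL (OAuth client-credentials grant).
Uri buildTokenUri(String apiKey, String secretKey) =>
    Uri.parse('https://aip.baidubce.com/oauth/2.0/token')
        .replace(queryParameters: {
      'grant_type': 'client_credentials',
      'client_id': apiKey,
      'client_secret': secretKey,
    });

/// Builds the JSON body for faceset/user/add from the locally captured photo.
Future<Map<String, dynamic>> buildAddFaceBody({
  required String imagePath,
  required String groupId,
  required String userId,
  String userInfo = '',
}) async {
  // Use the original local photo, base64-encoded.
  final bytes = await File(imagePath).readAsBytes();
  return {
    'image': base64Encode(bytes),
    'image_type': 'BASE64',
    'group_id': groupId,
    'user_id': userId,
    'user_info': userInfo,
  };
}

/// POSTs a JSON body to an endpoint, passing the token as a query parameter.
Future<Map<String, dynamic>> postJson(
    String endpoint, String accessToken, Map<String, dynamic> body) async {
  final uri = Uri.parse(endpoint)
      .replace(queryParameters: {'access_token': accessToken});
  final client = HttpClient();
  try {
    final request = await client.postUrl(uri);
    request.headers.contentType = ContentType.json;
    request.write(jsonEncode(body));
    final response = await request.close();
    final text = await response.transform(utf8.decoder).join();
    return jsonDecode(text) as Map<String, dynamic>;
  } finally {
    client.close();
  }
}
```

A call would then look like `postJson(addFace, token, await buildAddFaceBody(...))`, with `addFace` being the endpoint constant listed earlier. Keeping the Secret Key inside the app exposes it to anyone who unpacks the app, so if the client talks to Baidu directly it may be safer to have your own server issue the token.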

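2. Face search

After liveness verification succeeds, call searchFace to search the Baidu face library. A successful search returns the registered user's information.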

if (p0 == null ||

!p0.containsKey('result') ||

(p0['result'] == null)) {

hint();

return;

}

Map<String, dynamic> result = p0['result'] as Map<String, dynamic>;

if (result['user_list'] == null ||

(result['user_list'] as List<dynamic>).isEmpty) {

hint();

return;

}

List<dynamic> user_list = result['user_list'] as List<dynamic>;

Map<String, dynamic> user = user_list[0] as Map<String, dynamic>;

if (user['score'] < 80) {

hint();

return;

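}

The returned score is compared against a threshold to decide whether this is the same person; set the threshold to suit your own requirements.

That's all for now.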
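For reference, the search step could be wrapped in two small helpers: one that builds the request body and one that applies the score threshold. This is only a sketch. The helper names are hypothetical, the request field names (image, image_type, group_id_list) are my understanding of Baidu's v3 search API, and the response fields (result, user_list, score) are the ones used in the snippet above.

```dart
/// Builds the JSON body for the search endpoint.
/// Group ids are sent as one comma-separated string.
Map<String, dynamic> buildSearchBody(
  String imageBase64,
  List<String> groupIds,
) =>
    {
      'image': imageBase64,
      'image_type': 'BASE64',
      'group_id_list': groupIds.join(','),
    };

/// Returns the first candidate if its score reaches [minScore], else null.
/// Any missing or malformed part of the response also yields null.
Map<String, dynamic>? pickMatch(
  Map<String, dynamic>? response, {
  double minScore = 80,
}) {
  final result = response?['result'];
  if (result is! Map) return null;
  final users = result['user_list'];
  if (users is! List || users.isEmpty) return null;
  final top = users.first;
  if (top is! Map) return null;
  final score = (top['score'] as num?)?.toDouble() ?? 0.0;
  return score >= minScore ? Map<String, dynamic>.from(top) : null;
}
```

With these, the check becomes `final user = pickMatch(p0); if (user == null) { hint(); return; }`, and `minScore` is the one place to adjust how strict the match is.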